...

When complete, it should look similar to what is shown below.

ibsnow.properties

mqtt_server_url = ssl://55.23.12.33:8883

mqtt_server_name = Chariot MQTT Server

#mqtt_ca_cert_chain_path =

#mqtt_client_cert_path =

#mqtt_client_private_key_path =

sequence_reordering_timeout = 5000

primary_host_id = IamHost

snowflake_streaming_client_name = MY_CLIENT

snowflake_streaming_table_name = SPARKPLUG_RAW

snowflake_notify_db_name = cl_bridge_node_db

snowflake_notify_schema_name = stage_db

snowflake_notify_warehouse_name = cl_bridge_ingest_wh

snowflake_notify_task_enabled = true

snowflake_notify_nbirth_task_delay = 10000

snowflake_notify_data_task_delay = 5000
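Before restarting the service, it can help to confirm that the file parses and that no key was left empty. The following is a minimal sketch, not part of IBSNOW itself. The list of expected keys is taken from the example above, and treating them all as required is an assumption. The file location used in the usage section is also an assumption.

```python
from pathlib import Path

# Keys copied from the example ibsnow.properties above. Treating every one of
# them as required is an assumption made for this sketch.
EXPECTED_KEYS = [
    "mqtt_server_url",
    "mqtt_server_name",
    "primary_host_id",
    "snowflake_streaming_client_name",
    "snowflake_streaming_table_name",
    "snowflake_notify_db_name",
    "snowflake_notify_schema_name",
    "snowflake_notify_warehouse_name",
]


def parse_properties(text):
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first '=' only
        props[key.strip()] = value.strip()
    return props


def missing_keys(props):
    """Return the expected keys that are absent or have an empty value."""
    return [key for key in EXPECTED_KEYS if not props.get(key)]


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever ibsnow.properties is installed.
    path = Path("/opt/ibsnow/conf/ibsnow.properties")
    props = parse_properties(path.read_text())
    print("missing:", missing_keys(props) or "none")
```

Commented-out entries such as `#mqtt_ca_cert_chain_path =` are skipped, so they are never reported as missing.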


Now, modify the /opt/ibsnow/conf/snowflake_streaming_profile.json file. Set the following:

...

When complete, it should look similar to what is shown below.

snowflake_streaming_profile.json

{

"user": "IBSNOW_INGEST",

"url": "https://RBC48284.snowflakecomputing.com:443",

"account": "RBC48284",

"private_key": "MIIEwAIBADANBgkqhkiG9w0BAQEFAASCBKowggSmAgEAAoIBAQDN6NOoaoVVZSz/AIUohNn9oJThwDZg2/qASsIRYFjy0pSNKh+XsG6yp4kteII900lEgt5koroU+8oQrX7vnTI/69mvc5o+xJBfGogd+qcdw9tEEUZHEfBxBtlpZvfMY/HHyrilQBvWVrFqB3hYt9n15lE/wVi1LDII378yh2p+QcwEaKhKD1aWBYUlpOoA0d2214/UQiU6ytI18jJNPN3yQv9Jx3+/DRldlYh5fLIQ0AWbBqRnQoyLvLaYRIgynxDhrQpVtw8QN2M/XQErT3OxZzti7CKeI9M4xLchO3VZozsde5kcQwCIcop05PX6dtdSsqheQBRrhytf1K9GnfGdAgMBAAECggEBAKO8auLXoaMgS0GTlk98JSRL11gU0qj/BBmUWPIcXV7qGPqP7oNe5wfltW2VEGw9YVu7fUElLTeWaT4N2IyNwfGWiIm+MX+MKwmVPXwpX06J+ggMfIfzOfGG8sef+5hqOU8YYu/1JK2yTm3z9r0FpaqmNSGvi+y1ciwgUBfMGuC93pySQCHuNXkWw2njxvaltpOSm9H08aQtXsA+1JL31kP4WZAexk2EqzRzEka8hrGYfNIs9qQimKI9XznNoqmlSN6ZIO+A5e+kSUg0viyY/cZLwVj5FYV/wKN49WDDkB3dthCbx1Z1VuIw7rub9VU609eoTXDxEgMMBEDqbE5BXkECgYEA/ZsySGO2QouCihpPp0VtNrNh7PhA/OLch5zZt2iJBMhbjPn4SAyrzgi6lQc7b4oZOznOK5jOL4NQijt7SGz8rfrwfTd5Rl84lHlN7Cu3V0lBC8IN6JcXWBuTedmF7ShlL5ATbpXTsgaaqPm3H8VCS4fkoQ54bDZnCjI5/GtI5OkCgYEAz9pgvqXCXyJQxj5bM0uihZV3lZzvwpqlEuT0GvT9XqHM+LNKtf2kQ958qRq5Nh381oeRLyVbZTFrr0eNCCEA5YesbmxE7d/5vlWszfW5e70TUJEWbk0rrGNmqVUlAfEZKfK6ms87W3peHqqMyXqnmMwwecMl2c013XZaLKYxRpUCgYEAnWgHdKDXDkSTGG6uQ882sz3xqOiJRaz1XgK/qzPp35sQH9dDAE1FEZOfY0Ji5J8dfAIr8ilcyGbDxZiXs2NaDg5z1/RnhIMzlgwYjl6v5DBmfArNITEuXxR2m6mkk4eADl5pgTjjdVreAcVEoSaJOGI3SLO3kMrPd6enEAHy84kCgYEAsL2BjDtI1zpHsvqs9CY5URuybt7epPx4p2NWCmIN3Fz6/PL/8VZ3SlqyZ9zYZqMDLqxiENPULmzio03VJ3dg2swOHGsmBZtxMp6JbSyoBwbUmKp2h15JZ7GyRwSmjksj2Z6TfDYAxB1+UNc3Fc+dGXlvMup0kgpD5kfQD61Vsy0CgYEAn9QCQG+lcPG5GXXu3EAeVzqgy+gOXpyd4ys0fdssFF93AM+/Dd9F31sSSfdasEQ8+jFKjunEeQAOiecVQA4Vu9GGQAykrK/m8nD0zf02L1QpADTBA8TymkpD1yFEMo+T5DrZ24SRCl/zREb0hLn//ZOA=",

"port": 443,

"host": "RBC48284.snowflakecomputing.com",

"schema": "stage_db",

"scheme": "https",

"database": "cl_bridge_stage_db",

"connect_string": "jdbc:snowflake://RBC48284.snowflakecomputing.com:443",

"ssl": "on",

"warehouse": "cl_bridge_ingest_wh",

"role": "cl_bridge_process_rl"

}
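Several fields in this profile repeat the same host and port in different forms, so a typo in one of them is easy to miss. The sketch below checks that `url` and `connect_string` agree with `scheme`, `host`, and `port`. The rule that they must match exactly is inferred from the example above rather than from a documented requirement, and `profile_problems` is a hypothetical helper name.

```python
import json


def profile_problems(profile):
    """Return a list of inconsistencies between the related profile fields."""
    problems = []
    scheme = profile.get("scheme")
    host = profile.get("host")
    port = profile.get("port")

    # In the example, url is scheme://host:port (assumed to hold in general).
    expected_url = f"{scheme}://{host}:{port}"
    if profile.get("url") != expected_url:
        problems.append(f"url should be {expected_url}")

    # In the example, connect_string is the JDBC form of the same host and port.
    expected_jdbc = f"jdbc:snowflake://{host}:{port}"
    if profile.get("connect_string") != expected_jdbc:
        problems.append(f"connect_string should be {expected_jdbc}")

    return problems


if __name__ == "__main__":
    with open("/opt/ibsnow/conf/snowflake_streaming_profile.json") as handle:
        profile = json.load(handle)  # also fails loudly on malformed JSON
    for problem in profile_problems(profile):
        print(problem)
```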


Now the service can be restarted to pick up the new configuration. Do so by running the following command.

sudo /etc/init.d/ibsnow restart


At this point, IBSNOW should connect to the MQTT Server and be ready to receive MQTT Sparkplug messages. Verify by running the following command.

tail -f /opt/ibsnow/log/wrapper.log


After doing so, you should see output similar to what is shown below. Note that the last line reads 'MQTT Client connected to ...'. This indicates that IBSNOW is configured correctly and that the MQTT Server is properly provisioned.

INFO|7263/0||23-06-29 20:19:32|20:19:32.932 [Thread-2] INFO org.eclipse.tahu.mqtt.TahuClient - IBSNOW-8bc00095-9265-41: Creating the MQTT Client to tcp://54.236.16.39:1883 on thread Thread-2

INFO|7263/0||23-06-29 20:19:33|20:19:33.275 [MQTT Call: IBSNOW-8bc00095-9265-41] INFO org.eclipse.tahu.mqtt.TahuClient - IBSNOW-8bc00095-9265-41: connect with retry succeeded

INFO|7263/0||23-06-29 20:19:33|20:19:33.280 [MQTT Call: IBSNOW-8bc00095-9265-41] INFO org.eclipse.tahu.mqtt.TahuClient - IBSNOW-8bc00095-9265-41: Connected to tcp://54.236.16.39:1883

INFO|7263/0||23-06-29 20:19:33|20:19:33.294 [MQTT Call: IBSNOW-8bc00095-9265-41] INFO o.eclipse.tahu.host.TahuHostCallback - This is a offline STATE message from IamHost - correcting with new online STATE message

FINEST|7263/0||23-06-29 20:19:33|20:19:33.297 [MQTT Call: IBSNOW-8bc00095-9265-41] INFO o.eclipse.tahu.host.TahuHostCallback - This is a offline STATE message from IamHost - correcting with new online STATE message

FINEST|7263/0||23-06-29 20:19:33|20:19:33.957 [Thread-2] INFO org.eclipse.tahu.mqtt.TahuClient - IBSNOW-8bc00095-9265-41: MQTT Client connected to tcp://54.236.16.39:1883 on thread Thread-2
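If you would rather check for the connection line in a script than watch the log by eye, the following sketch scans the log for it. It assumes the message text 'MQTT Client connected to' appears exactly as in the sample output above. `connected_servers` is a hypothetical helper name.

```python
import re

# Matches the final line of the sample output and captures the server URL.
CONNECTED = re.compile(r"MQTT Client connected to (\S+)")


def connected_servers(log_lines):
    """Return the server URLs found in 'MQTT Client connected to ...' lines."""
    servers = []
    for line in log_lines:
        match = CONNECTED.search(line)
        if match:
            servers.append(match.group(1))
    return servers


if __name__ == "__main__":
    with open("/opt/ibsnow/log/wrapper.log") as handle:
        found = connected_servers(handle)
    print(found[-1] if found else "no successful MQTT connection logged yet")
```

The earlier 'Creating the MQTT Client to ...' and 'Connected to ...' lines are deliberately not matched, so only the final confirmation counts.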

Edge Setup with Ignition and MQTT Transmission

...

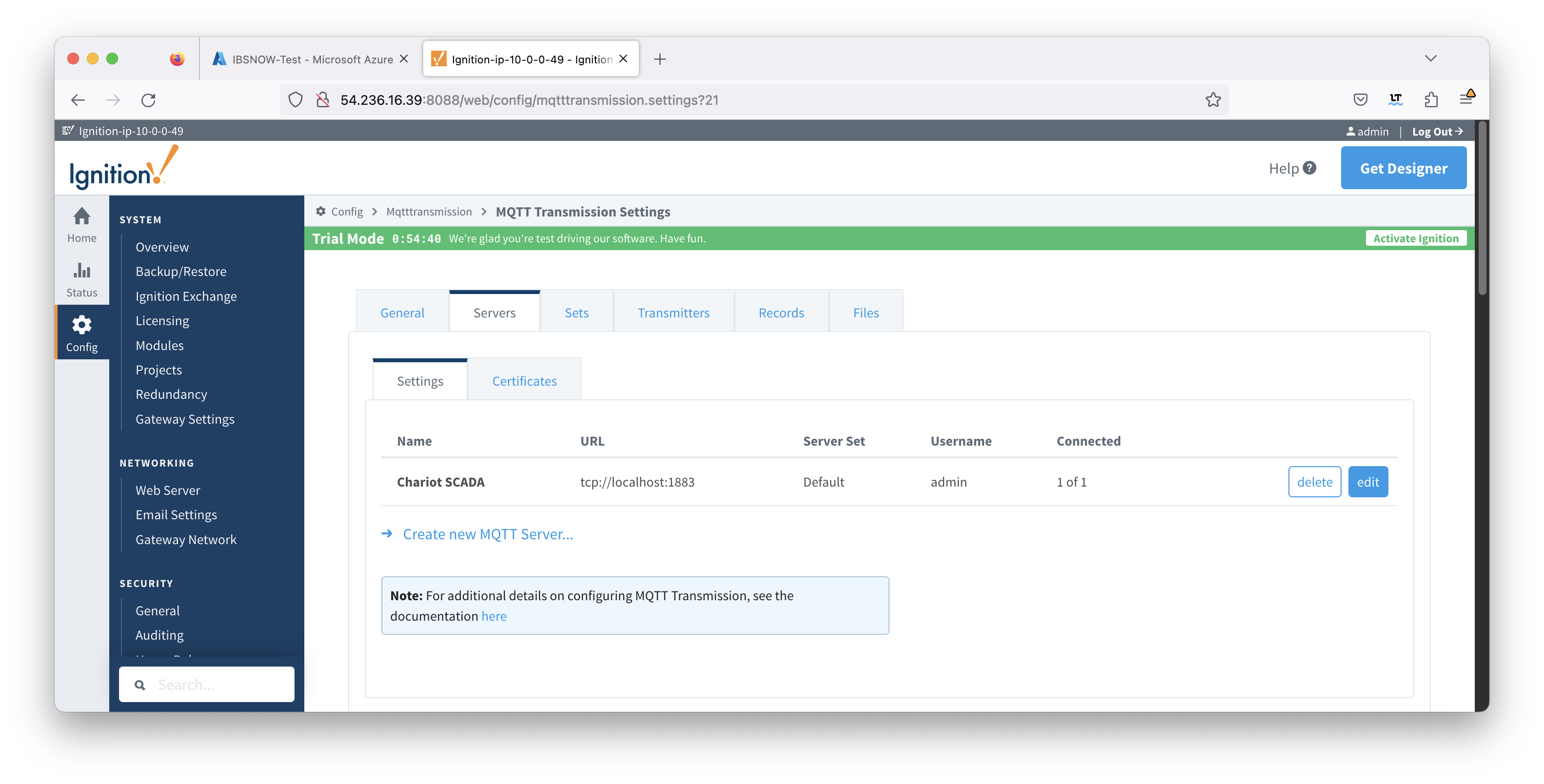

When complete, you should see something similar to the following. However, the 'Connected' state should show '1 of 1' if everything was configured properly.


At this point, data should be flowing into Snowflake. By tailing the log in IBSNOW you should see something similar to what is shown below. This shows IBSNOW receiving the messages published from Ignition/MQTT Transmission. When IBSNOW receives the Sparkplug MQTT messages, it creates and updates asset models and assets in Snowflake. The log below is also a useful debugging tool if things don't appear to work as they should.

Successful Insert

FINEST|199857/0||23-04-21 15:46:22|15:46:22.951 [TahuHostCallback--3deac7a5] INFO o.e.tahu.host.TahuPayloadHandler - Handling NBIRTH from My MQTT Group/Edge Node ee38b1

FINEST|199857/0||23-04-21 15:46:22|15:46:22.953 [TahuHostCallback--3deac7a5] INFO o.e.t.host.manager.SparkplugEdgeNode - Edge Node My MQTT Group/Edge Node ee38b1 set online at Fri Apr 21 15:46:22 UTC 2023

FINEST|199857/0||23-04-21 15:46:23|15:46:23.072 [TahuHostCallback--3deac7a5] INFO o.e.tahu.host.TahuPayloadHandler - Handling DBIRTH from My MQTT Group/Edge Node ee38b1/PLC 1

FINEST|199857/0||23-04-21 15:46:23|15:46:23.075 [TahuHostCallback--3deac7a5] INFO o.e.t.host.manager.SparkplugDevice - Device My MQTT Group/Edge Node ee38b1/PLC 1 set online at Fri Apr 21 15:46:22 UTC 2023

FINEST|199857/0||23-04-21 15:46:23|15:46:23.759 [ingest-flush-thread] INFO n.s.i.s.internal.FlushService - [SF_INGEST] buildAndUpload task added for client=MY_CLIENT, blob=2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec, buildUploadWorkers stats=java.util.concurrent.ThreadPoolExecutor@32321763[Running, pool size = 2, active threads = 1, queued tasks = 0, completed tasks = 1]

FINEST|199857/0||23-04-21 15:46:23|15:46:23.774 [ingest-build-upload-thread-1] INFO n.s.i.i.a.h.io.compress.CodecPool - Got brand-new compressor [.gz]

FINEST|199857/0||23-04-21 15:46:23|15:46:23.822 [ingest-build-upload-thread-1] INFO n.s.i.streaming.internal.BlobBuilder - [SF_INGEST] Finish building chunk in blob=2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec, table=CL_BRIDGE_STAGE_DB.STAGE_DB.SPARKPLUG_RAW, rowCount=2, startOffset=0, uncompressedSize=5888, compressedChunkLength=5872, encryptedCompressedSize=5888, bdecVersion=THREE

FINEST|199857/0||23-04-21 15:46:23|15:46:23.839 [ingest-build-upload-thread-1] INFO n.s.i.s.internal.FlushService - [SF_INGEST] Start uploading file=2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec, size=5888

FINEST|199857/0||23-04-21 15:46:24|15:46:24.132 [ingest-build-upload-thread-1] INFO n.s.i.s.internal.FlushService - [SF_INGEST] Finish uploading file=2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec, size=5888, timeInMillis=292

FINEST|199857/0||23-04-21 15:46:24|15:46:24.148 [ingest-register-thread] INFO n.s.i.s.internal.RegisterService - [SF_INGEST] Start registering blobs in client=MY_CLIENT, totalBlobListSize=1, currentBlobListSize=1, idx=1

FINEST|199857/0||23-04-21 15:46:24|15:46:24.148 [ingest-register-thread] INFO n.s.i.s.i.SnowflakeStreamingIngestClientInternal - [SF_INGEST] Register blob request preparing for blob=[2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec], client=MY_CLIENT, executionCount=0

FINEST|199857/0||23-04-21 15:46:24|15:46:24.301 [ingest-register-thread] INFO n.s.i.s.i.SnowflakeStreamingIngestClientInternal - [SF_INGEST] Register blob request returned for blob=[2023/4/21/15/46/rth2hb_eSKU3AAtxudYKnPFztPjrokzP29ZXzv5JFbbj0YUnqUUCC_1049_48_1.bdec], client=MY_CLIENT, executionCount=0
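When debugging, it is often enough to know which Sparkplug messages IBSNOW handled and from which Edge Node or Device. The sketch below pulls that information out of 'Handling ... from ...' lines such as the NBIRTH and DBIRTH entries above. Only those two message types appear in the sample log, so treating other types (for example DDATA) as following the same pattern is an assumption. `sparkplug_events` is a hypothetical helper name.

```python
import re
from collections import Counter

# Captures the message type and the group/edge node[/device] path.
HANDLING = re.compile(r"Handling (\w+) from (.+)")


def sparkplug_events(log_lines):
    """Return (message_type, source) pairs from 'Handling X from Y' lines."""
    events = []
    for line in log_lines:
        match = HANDLING.search(line)
        if match:
            events.append((match.group(1), match.group(2).rstrip()))
    return events


if __name__ == "__main__":
    with open("/opt/ibsnow/log/wrapper.log") as handle:
        events = sparkplug_events(handle)
    # Summarize how many messages of each type were handled.
    for message_type, count in Counter(kind for kind, _ in events).items():
        print(message_type, count)
```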


Data will also be visible in Snowflake at this point. See below for an example. Changing data values in the UDT tags in Ignition produces DDATA Sparkplug messages. Every time the Edge Node connects, it produces NBIRTH and DBIRTH messages. All of these will now appear in Snowflake with their values, timestamps, and qualities.

...
